Exhibit evaluation for

Children’s Exhibits

The Kirby Science Center Experience.

By

John A. Veverka

Why Ask?

Evaluation has long been a part of any interpretive planning strategy, especially for

interpretive center or museum exhibits. When you consider the costs of exhibits to

agencies (estimated at $300 per square foot of exhibit floor space) you would think that

before the exhibits were delivered the agency would want to make sure they

"worked", i.e., accomplished the objectives they were designed for. Unfortunately,

this evaluation process rarely happens, and many exhibits quietly "fail" to make

any contact with visitors.

I was recently a part of the Derse Exhibits team to plan, design, build and

"evaluate" exhibits for the new Kirby Science Center, in Sioux Falls, South

Dakota. They had three empty floors and wanted top quality science exhibits to fill the

building – a $3,000,000.00 project. As part of the total project, the client wanted a

thorough evaluation of exhibits to make sure that each exhibit accomplished its specific

interpretive objectives. This short article will summarize how the evaluation

took place and what the team and I learned from this "wrenching" experience

called evaluation.

What were the exhibits supposed to do?

Before you can evaluate anything you have to first know what it was supposed to

accomplish. Part of the total exhibit plan was an "Interpretive Exhibit" plan.

Under this plan, each individual exhibit had, in writing, a specific

concept the exhibit was to present, and specific learning, behavioral and emotional

objectives it was held accountable for accomplishing. We would later evaluate the

mock-up exhibits against those stated objectives.

The evaluation strategy.

For this project I developed several different evaluation methods to be

used for the total evaluation. The evaluation would take approximately 4 weeks to do. We

set up draft/mock-up exhibits in the warehouse of Derse Exhibits – evaluating

approximately 15 exhibits each week, representing six different science subject areas. We

then arranged with local schools for teachers to bring in their classes to "test the

exhibits" for us. We would test each set of 15 exhibits over the course of one week.

The evaluation strategies included:

A written pre test and post test. We brought in school buses of children from

different schools to be our "audience" for the evaluation. Before being allowed

to use the exhibits each group took a short written multiple choice and true/false pre

test relating to each exhibit's objectives. After the pre test the children could then go

and "use" the exhibits. After spending about 45 minutes with the exhibits they

came back for a written post test. We wanted to see if there was any change in what they

knew about the tested science concepts between the time before they saw and used the

exhibits and the time after they interacted with them.

Observational Studies. This part of the evaluation used a trained observer

stationed at each exhibit to simply watch/record what the children did or didn’t do.

This told us a lot about things like "instructions", graphic placements, and

subjects that children did and didn’t have any interest in.
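The written pre test and post test comparison described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the function names, the answer key, and the sample answers are all hypothetical and are not data from the Kirby evaluation.

```python
def percent_correct(answer_key, responses):
    """Percentage of all answers, across all children, that match the key."""
    total = len(answer_key) * len(responses)
    if total == 0:
        return 0.0
    correct = sum(
        1
        for child_answers in responses
        for given, expected in zip(child_answers, answer_key)
        if given == expected
    )
    return 100.0 * correct / total


def pre_post_change(answer_key, pre_responses, post_responses):
    """Return (pre %, post %, change in percentage points)."""
    pre_pct = percent_correct(answer_key, pre_responses)
    post_pct = percent_correct(answer_key, post_responses)
    return pre_pct, post_pct, post_pct - pre_pct


# Hypothetical four-question test (multiple choice and true/false), two children.
key = ["b", "true", "a", "false"]
pre_answers = [["b", "false", "c", "false"], ["a", "true", "c", "true"]]
post_answers = [["b", "true", "a", "false"], ["b", "true", "c", "false"]]

pre_pct, post_pct, change = pre_post_change(key, pre_answers, post_answers)
print(f"pre: {pre_pct}%  post: {post_pct}%  change: {change} points")
```

The same test is scored before and after the children use the exhibits, so any change can be tied back to the objectives each question was written against.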

The quick fix – and fix – and fix again.

Essentially our plan was to have two groups of children test the exhibits on Monday of

each of the four weeks. We would then analyze the test results and our observational

results – make any changes to the exhibits on Tuesday – bring in two new groups

of students on Wednesday – make any more corrections on Thursday – do one final

test on Friday – make any final adjustments, and then ship out the completed exhibits

from that week's testing on to the Science Center over the weekend. We would then repeat

the evaluation process the next week for 15 different exhibits.

What we found out – Oh the pain!

What did we learn from this experience? We learned that if we had not done the testing

the great majority of the exhibits we "adults" planned would have been failures!

Virtually EVERY exhibit we tested had to be "fixed" in some way. Here are a few

examples of some of the things we observed:

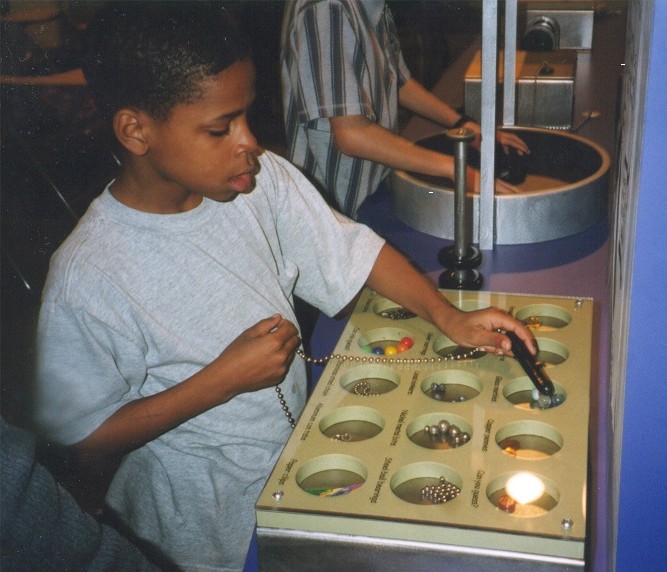

For example, with this exhibit on "magnetism" (above) you were directed (left

photo, arrow) to move the magnet on the chain UNDER the objects; the magnetic items

would then move. Not one child followed these directions. They only used the magnet from above!

They wanted to see the magnet on the chain interact directly with the item in the exhibit

(right photo). We fixed this by changing the directions and raising items in each

container so magnetic objects would react with the magnet held from above. We found that

children found any written directions to be "invisible". In 98% of the cases the

children did not look at or read "any" directions unless an adult suggested they

do so. If they had to read complex directions to do the activity – they usually left

the activity.

Another interesting example of what and how children think was our "how bats find

food" exhibit (below).

We also were able to test the construction of the exhibits themselves, and some of the

exhibit tools. For example, our "indestructible" microscopes (photo below) didn’t

last a week!

What we learned.

This month-long evaluation process taught us all a lot. The most important lesson was

that if we hadn't done the evaluation, we would have built exhibits based on adult ideas of

how children learn, and children would NOT have learned from them.

Some key points:

From the pre-post tests, we found that there were some subjects students already had

good concept-level understanding of – pre tested at an 80% correct response or higher

on the written test; and some areas they had a very poor understanding of – with

correct responses on the pre test of 50% or less. We did find that when comparing the pre

test and post test results, there were often increases in correct answers on the post

tests, depending on the individual exhibits. So the exhibits were generally working –

but the initial post test improvements were generally very weak, maybe only 5-15%

improvements on post tests at the start of the week (Monday testing). But by Friday, after

the exhibits had gone through many changes in design, instruction presentation, and

concept presentation, we were at an average of 80% comprehension or better on post testing

for most exhibits. By doing this formative evaluation throughout the week of

testing, we were ending up with "very good to excellent" exhibits as far as

having their educational objectives accomplished at a 70% level or higher (our goal).

We found that EVERY exhibit we evaluated over the 4-week period (about 60 exhibits) had

to have some "improvements". Some exhibits just needed a little fix, such

as the addition of a label that said "push the button" (otherwise the button to

start the activity would not be pushed), while other exhibits needed a major re-design.

We found that children did not even look at, let alone read, any "written"

instructions. But we did have success in redesigning instructions in cartoon or

"comic book" formats, a more visual presentation of the instructions. The

instructions themselves had to look fun or interesting. To be used most efficiently, many

of these exhibits would require a docent, science educator or teacher to help

facilitate and direct the learning activity. But the exhibits did work effectively on

their own after evaluation-driven re-designs. When our researchers facilitated the

learning (explaining directions, etc.), the exhibits worked wonderfully.

The design team and the client all learned that the ONLY way you will know for sure if

you have a "successful" exhibit – not just a pretty exhibit – is to

evaluate it with your intended target market group. The visitors will tell you if your

exhibit is successful in communicating with them or not – if you ask them!
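The pre test and post test figures in the first key point above can be turned into a simple check for each exhibit. This is a minimal sketch: the 70% goal and the 80% and 50% pre test levels come from this article, but the "moderate" label, the function names, and the sample scores are hypothetical, and the 5-15% improvements are treated here as percentage points, which is an assumption.

```python
GOAL_PERCENT = 70.0         # post test goal stated in the article
STRONG_PRE_PERCENT = 80.0   # pre test level described as good understanding
WEAK_PRE_PERCENT = 50.0     # pre test level described as poor understanding


def prior_knowledge(pre_percent):
    """Label what children knew before using the exhibit."""
    if pre_percent >= STRONG_PRE_PERCENT:
        return "good"
    if pre_percent <= WEAK_PRE_PERCENT:
        return "poor"
    return "moderate"  # hypothetical label for scores in between


def summarize(name, pre_percent, post_percent):
    """Compare one exhibit's pre and post test scores against the goal."""
    return {
        "exhibit": name,
        "prior_knowledge": prior_knowledge(pre_percent),
        "gain": post_percent - pre_percent,  # percentage points (assumed)
        "meets_goal": post_percent >= GOAL_PERCENT,
    }


# Hypothetical scores for one exhibit at the start and end of its test week.
monday = summarize("sample exhibit", 45.0, 55.0)
friday = summarize("sample exhibit", 45.0, 82.0)
print(monday)
print(friday)
```

A summary like this makes it easy to see which exhibits still need changes after each round of testing.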

Summary

This short article only begins to touch on some of the many complex educational issues

and design challenges we encountered by doing this evaluation. My goal was to provide an

introduction to why we should evaluate, and how the process helped us to finally design and build

exhibits that were really educationally successful. Based on all that we

learned about exhibit users for this museum, we believe that evaluation for any exhibit project is

not an option but a requirement for true exhibit success.

References

Veverka, John A. 1998. Kirby Science Center Exhibit Evaluation Report. Unpublished

report for the Kirby Science Center, Sioux Falls, South Dakota.

Veverka, John A. 1994. Interpretive Master Planning. Acorn Naturalists, Tustin,

CA.

Veverka, John A. 1999. "Where is the Interpretation in Interpretive

Exhibits". Unpublished paper available online at: www.heritageinterp.com (in our

LIBRARY).

John Veverka

John Veverka & Associates

www.heritageinterp.com

jvainterp@aol.com